
The Oxford Dictionaries declared “post-truth” word of the year in 2016, reflecting “circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief”. The rise of “fake news” has undoubtedly played a central role in this debate. Although there is much to be concerned about, the title of my public lecture (and this article) is meant to inspire a collective determination to stand tall against the challenges that lie ahead. To understand how to respond effectively to fake news, I propose an agenda that explores three core themes: (1) identifying the problem, (2) evaluating the societal consequences, and (3) exploring practical solutions.

- IDENTIFYING THE PROBLEM

What is fake news?

Some time ago, I was invited to speak at Wilton Park by the United Nations Special Rapporteur for the protection of freedom of opinion and expression. The meeting was attended by many different parties, all attempting to answer a deceptively difficult question: “What is ‘fake news’?” Most attendees agreed that the term is not new. However, no clear definition exists, and establishing the boundary conditions of what does and does not constitute “fake news” proved more challenging than anticipated. For example, does simple human error count as “fake news”? What about satire, misinformation or propaganda? Since then, I have given this question considerable thought, and I have heard many people, including colleagues, use the terms “misinformation”, “propaganda”, and “fake news” interchangeably. I believe this deprives the discussion of an important degree of contextual nuance.

Accordingly, I have devised a simple rule of thumb to help explain the subtle but important differences between misinformation, disinformation and propaganda, at least in social-psychological terms. If we think of fake news as varying along a spectrum, then at the far left end we find “misinformation”, which is simply information that is false or incorrect, including human error. Misinformation coupled with a clear intent to cause harm or to deliberately deceive others can be thought of as “disinformation” (D = M + I). In turn, at the far right end of the spectrum we find “propaganda”, which can be defined as disinformation coupled with an implicit or explicit political agenda (P = D + Pa). From a psychological perspective, this distinction matters: while most people can forgive simple human error, a deliberate intention to cause harm turns the act into a moral transgression, to which we react much more strongly (think of moral emotions such as outrage).
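The rule of thumb can be made concrete as a small decision function. This is a minimal sketch, assuming each item can be answered with three yes/no questions (is it false? is there intent to harm or deceive? is there a political agenda?). The names `InfoType` and `classify`, and the extra `ACCURATE` label for true content, are hypothetical; in practice, judging intent is the hard part and not something a function can decide.

```python
from enum import Enum


class InfoType(Enum):
    """Positions on the misinformation-to-propaganda spectrum (hypothetical labels)."""
    ACCURATE = "accurate"
    MISINFORMATION = "misinformation"  # M: false or incorrect, including honest error
    DISINFORMATION = "disinformation"  # D = M + I: plus intent to harm or deceive
    PROPAGANDA = "propaganda"          # P = D + Pa: plus a political agenda


def classify(is_false: bool, intends_harm: bool, political_agenda: bool) -> InfoType:
    """Apply the rule of thumb D = M + I and P = D + Pa.

    Each label builds on the previous one, so intent only matters for false
    content, and a political agenda only matters once intent is present.
    """
    if not is_false:
        return InfoType.ACCURATE
    if not intends_harm:
        return InfoType.MISINFORMATION
    if not political_agenda:
        return InfoType.DISINFORMATION
    return InfoType.PROPAGANDA


print(classify(is_false=True, intends_harm=False, political_agenda=False).value)  # misinformation
print(classify(is_false=True, intends_harm=True, political_agenda=True).value)    # propaganda
```

Because each label requires every ingredient of the one before it, a false claim spread with a political agenda but without intent to deceive still counts as misinformation under this rule.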

In other words, I do not believe that the real issues at stake include funny memes, satire or simple errors in news reporting. Instead, our concern cuts across two different but related challenges. First, there is a rise in efforts to purposefully deceive the public and to undermine people’s ability to form evidence-based opinions on key societal issues. Second, we are faced with navigating a new media environment, one that facilitates the unprecedented spread of misinformation. In contrast to most of human history, much of the Western world currently has unparalleled access to information and the ability to fact-check claims in real time. Post-truth refers to the startling paradox that despite this immense potential for forming evidence-based opinions, many people seem to readily accept blatant falsehoods. Although the motivations underlying such polarised responses to “facts” are complex, it is clear that we need to address them. Let us start by assessing the scale of the problem.

- EVALUATING THE SOCIETAL CONSEQUENCES

How bad is it?

Trust forms the basis of any good relationship, and when we examine public indicators of trust in science, it is reassuring to find that trust in science remains stable and very high in the United States, the United Kingdom and the rest of Europe. Thus, in principle, I sympathise with the view that the scale of the issue has been somewhat blown out of proportion.

At the same time, I do not believe that “post-truth” is just another empty label, as there is indeed much to be concerned about. The spread of misinformation is a real threat to maintaining a well-informed populace, which forms the basis of any healthy democracy. Accordingly, the UK Parliament recently launched an investigation into the ways in which “fake news” might be undermining democracy. The conclusions of that investigation strike me as mixed, perhaps in part because the scale of the problem is difficult to quantify. For example, a recent study found that only a fraction of Americans were exposed to fake news during the most recent US election, at least to an extent that could have led to voter persuasion. However, the evidence base on this front remains severely underdeveloped.

Moreover, a survey by the Pew Research Center indicates that nearly 65% of Americans feel that fake news leaves them confused about basic facts. Interestingly, although many people feel quite confident that they could tell the difference between real and fake news, a study by Channel 4 found that, when put to the test, only 4% of the British adults who took part were able to correctly identify the false stories. Furthermore, psychological research shows that the more often we are exposed to a story, the more likely we are to think it is true; this is known as the “illusory truth effect”. In other words, if you repeat something often enough, people will start to believe it (e.g. the common myth that we only use 10% of our brains).

Echo Chambers, Filter Bubbles, and Moral Tribes

Another concern surrounds the “status of facts” in society: instead of attending to evidence, people seem to retreat into their cultural tribes and respond solely on the basis of how they feel about an issue. On the whole, I would say this is an inaccurate characterisation of human psychology. People selectively attend to information all the time, and this is completely normal: we simply cannot pay attention to everything, so we home in on the stories that are most interesting and relevant to us. At the more extreme end of the spectrum we find “motivated reasoning”, a defensive process that involves actively rejecting evidence that contradicts deeply held personal convictions. Yet people also have a strong motivation to hold accurate perceptions of the world, and these motivations can compete with each other. The more important question is therefore one of context: which motivation wins out, and under what circumstances?

Unfortunately, social media platforms cause these fairly normal processes to go into overdrive. Most UK adults now consume their news online, and greater access to information via online news and social media fosters selective exposure to ideological content, resulting in so-called “echo chambers” of like-minded opinion. Echo chambers limit exposure to views from the “other” side and, as such, can fuel social extremism and group polarisation. In addition, social media platforms such as Facebook use algorithms to tailor newsfeeds and recommend content based on a user’s previous click behaviour, resulting in “filter bubbles”. Importantly, most of the public is still unaware that they are the subject of so-called “microtargeting” campaigns, in which companies and political campaigners pay Facebook to send tailored messages to users with specific profiles. It is difficult to produce “hard” evidence that echo chambers and filter bubbles are harming democracy. For example, Facebook has claimed, based on its own analyses, that the echo chamber effect is overhyped. Yet there are important discrepancies between the types of data available to the scientific community and to social media companies. Scholars can typically access only publicly available data, which means that we see only a tiny snapshot of the behaviour of the millions of people engaging with (fake) news stories on social media platforms. Without transparency and independent scientific evaluation, we remain limited in our ability to assess the full scope of the problem.
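To illustrate how ranking content by past clicks can narrow a feed, here is a deliberately simplified Python sketch. It is not Facebook's algorithm (which is not public); `rank_feed` and `simulate_clicks` are hypothetical names, the "left"/"right" topic labels are illustrative, and the simulated user is assumed to always click the top-ranked story.

```python
from collections import Counter


def rank_feed(candidates: list[tuple[str, str]], click_history: list[str]) -> list[str]:
    """Order (story_id, topic) pairs by how often the user clicked each topic before.

    sorted() is stable even with reverse=True, so stories whose topics have
    equal click counts keep their original order.
    """
    clicks = Counter(click_history)
    ranked = sorted(candidates, key=lambda story: clicks[story[1]], reverse=True)
    return [story_id for story_id, _topic in ranked]


def simulate_clicks(candidates: list[tuple[str, str]], click_history: list[str],
                    rounds: int) -> list[str]:
    """Feed each click back into the history, assuming the user always clicks the top story."""
    history = list(click_history)
    topic_of = dict(candidates)  # story_id -> topic
    for _ in range(rounds):
        top_story = rank_feed(candidates, history)[0]
        history.append(topic_of[top_story])
    return history


stories = [("s1", "left"), ("s2", "right"), ("s3", "left"), ("s4", "right")]
print(rank_feed(stories, ["left", "right", "left"]))  # ['s1', 's3', 's2', 's4']
print(Counter(simulate_clicks(stories, ["left", "right", "left"], rounds=5)))
# Counter({'left': 7, 'right': 1})
```

Starting from two clicks on one topic and one on the other, every round reinforces the early lead, so the other topic never returns to the top of the feed. Real recommender systems are far more complex, but this feedback loop is the basic mechanism behind a filter bubble.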

- EXPLORING PRACTICAL SOLUTIONS

A Vaccine Against Fake News

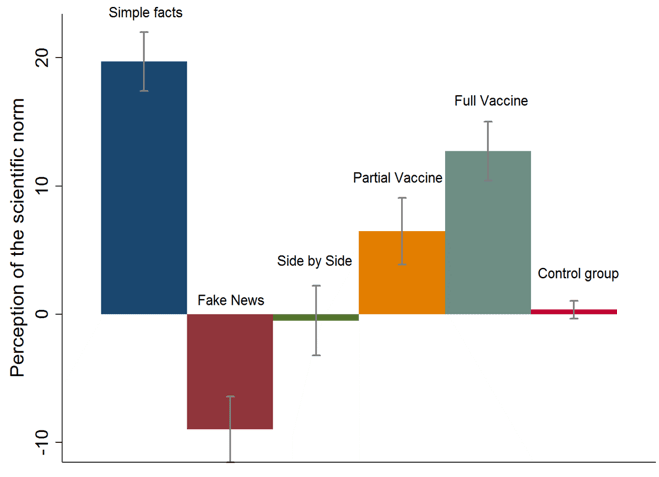

I initially started thinking about the vaccine metaphor when I came across some interesting work showing how models from epidemiology could be adapted to model the viral spread of misinformation, i.e. how one false idea can rapidly spread from one mind to another within a network of interconnected individuals. This led to the idea that it may be possible to develop a “mental” vaccine against fake news. It turns out that in the 1960s, a psychologist named William McGuire had started a program of research at Yale University exploring how attitudinal resistance to persuasion attempts could be induced using the same biological metaphor. To illustrate: injections that contain a weakened dose of a virus (vaccines) can confer resistance against future infection by triggering the production of antibodies. Inoculation theory postulates that the same can be achieved with “mental antibodies”. In other words, by preemptively exposing people to a weakened version of an argument, and then refuting that argument, attitudinal resistance to persuasion can be conferred. Although McGuire was interested in protecting beliefs about relatively innocuous matters (“cultural truisms”), my colleagues at Yale and I reasoned that it might be possible to extend and adapt this approach to a context in which facts are heavily “contested”. Our study focused on disinformation about a very serious societal issue: climate change. In particular, a debunked petition formed the basis of a viral fake news story claiming that thousands of scientists had concluded that climate change is a hoax. In our study, we tried to inoculate the public against this bogus petition (Figure 1).

Essentially, we found that when we simply communicated the fact that 97% of climate scientists have concluded that global warming is happening, most people shifted their perceptions in line with the science (blue bar). As expected, when we exposed people to the false petition, they shifted away from the conclusions of climate science (red bar). When we exposed people to both stories side by side (reflecting the current media environment), the misinformation completely cancelled out the facts (green bar), highlighting the potency of fake news. In the inoculation conditions, we preemptively warned people that some political actors use misleading tactics to try to deceive the public (partial vaccine, orange bar); in the full vaccine condition, we also explained in advance that the petition contains false signatories (e.g. Charles Darwin). In both groups, people were much less influenced by the misinformation, preserving about one third and two thirds of the “facts”, respectively. What is promising is that we observed these patterns across the political spectrum, reducing group polarisation.

Figure 1: Fake News Vaccine.

I have come to believe that the real power of the vaccine lies in its ability to be shared interpersonally. If we know of a falsehood and have the opportunity to help inoculate someone against an impending fake news story, the moral responsibility lies with us. In an ideal scenario, the social spread of the inoculation (both online and offline) could help create societal resistance, or “herd immunity”, against fake news. Could the media help? This is a difficult question. While news organisations are often the first to know what news is about to break, they are currently not in the business of inoculating people against fake news, primarily because the incentive structure of the media requires them to rake in clicks and pay the bills. In some sense, education may be the greatest inoculation. We have recently developed an educational “fake news” game that we have begun to pilot-test in high schools. The purpose of the game is to inoculate students against fake news by letting them step into the shoes of different fake news producers. Of course, there are other solutions. Several European countries have started to fine social media companies for failing to remove defamatory fake news. Facebook has partnered with independent fact-checkers to help flag “disputed” content. Google is demoting fake news in its search results. Vaccines do not always offer full protection, but the gist of inoculation is that we need to play offence rather than defence, and that it is better to prevent than to cure. It is one tool among many to help each other navigate this brave new world.
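The epidemiological framing and the idea of “herd immunity” can be sketched with the textbook SIR (susceptible-infected-recovered) model, reinterpreted here for misinformation. This is an illustrative sketch, not the specific model from the epidemiological work mentioned above: `simulate_sir`, the parameter values, and the assumption that inoculated people start in the resistant compartment are all hypothetical. With R0 = beta / gamma, a false story can only take off if R0 times the susceptible fraction exceeds 1, which is why inoculating more than 1 - 1/R0 of the population in advance acts as herd immunity.

```python
def simulate_sir(beta: float, gamma: float, inoculated: float = 0.0,
                 initial_sharers: float = 0.01, days: float = 200.0,
                 dt: float = 0.1) -> tuple[float, float]:
    """Forward-Euler integration of the SIR equations on population fractions.

    S: susceptible (not yet exposed), I: actively believing and sharing the story,
    R: resistant (stopped sharing, or inoculated in advance; an assumption here).
    Returns (peak fraction of active sharers, fraction that ever shared).
    """
    s = 1.0 - initial_sharers - inoculated
    i = initial_sharers
    r = inoculated
    peak = i
    for _ in range(int(days / dt)):
        new_sharers = beta * s * i * dt  # contacts between S and I that "transmit" the story
        stopped = gamma * i * dt         # sharers who lose interest or are corrected
        s -= new_sharers
        i += new_sharers - stopped
        r += stopped
        peak = max(peak, i)
    ever_shared = 1.0 - s - inoculated
    return peak, ever_shared


# Illustrative parameters: R0 = 0.3 / 0.1 = 3, so the herd-immunity threshold is about 0.67.
for share in (0.0, 0.5, 0.7):
    peak, ever = simulate_sir(beta=0.3, gamma=0.1, inoculated=share)
    print(f"inoculated={share:.1f}  peak sharers={peak:.3f}  ever shared={ever:.3f}")
```

In this sketch, inoculating half the population shrinks the outbreak without stopping it, whereas inoculating 70% (above the 1 - 1/R0 threshold) means the number of active sharers declines from the very start.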

FURTHER READING

van der Linden, S., Leiserowitz, A., Rosenthal, S., & Maibach, E. (2017). Inoculating the public against misinformation about climate change. Global Challenges, 1(2), 1600008.

McGuire, W. J. (1970). A Vaccine for Brainwash. Psychology Today, 3(9), 36-39.

Harriss, L., & Raymer, K. (2017). Online information and fake news. POSTnote 559. Parliamentary Office of Science and Technology. London, UK: Houses of Parliament.